Table of Contents

The 2 AM alert. The all-hands war room. The scramble to identify root cause while customers are already affected. The post-mortem that reveals the warning signs were there all along, buried in a sea of noise.

Enterprise IT operations have operated in perpetual firefighting mode for too long. Traditional monitoring tools generate thousands of alerts daily, but they can't tell you which ones matter or predict which small anomaly will cascade into a major outage. The result? SLA breaches, revenue loss, and exhausted engineering teams constantly reacting to crises instead of preventing them.

The cost is staggering. A single hour of downtime for a mid-sized e-commerce platform can mean $300,000 in lost revenue. A critical application failure during peak business hours violates SLAs while simultaneously eroding customer trust and damaging brand reputation in ways that persist long after systems are restored.

This reactive approach to incident management is no longer sustainable. Cloud-native architectures, microservices proliferation, and distributed systems have created operational complexity that human teams simply cannot manage through manual processes and static threshold alerts. The future belongs to organizations that prevent fires rather than just fighting them faster.

This is where AIOps fundamentally changes the equation.

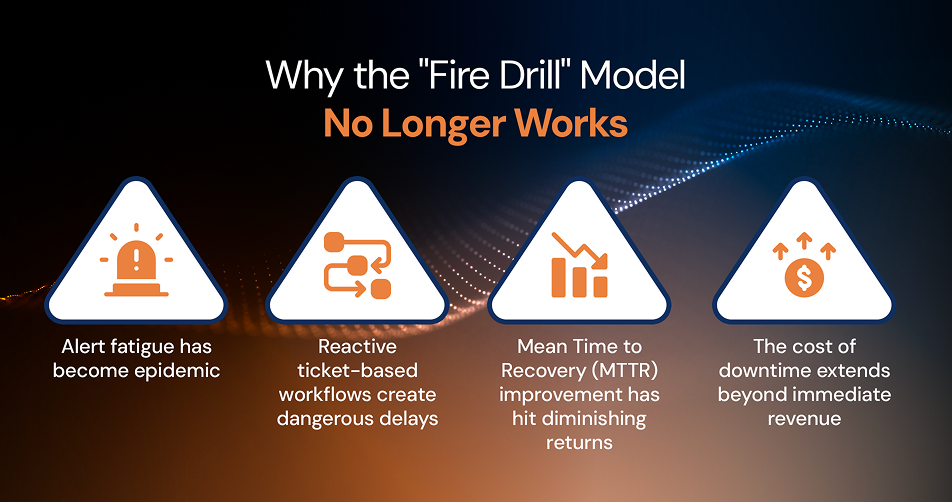

Why the "Fire Drill" Model No Longer Works

Modern enterprises operate in an environment of exponential complexity. A single customer transaction might touch 50+ microservices, each generating logs, metrics, and traces. Containerized applications spin up and down dynamically. Multi-cloud deployments create dependencies that traditional monitoring tools were never designed to track.

The consequences of this complexity are clear:

Alert fatigue has become epidemic. SRE teams receive hundreds or thousands of alerts daily, with 90% proving to be false positives or low-priority noise. Critical signals get lost in the chaos, and by the time human operators identify the real issue, customers are already experiencing degraded service.

Reactive ticket-based workflows create dangerous delays. Traditional ITSM processes (alert triggers ticket, ticket gets triaged, ticket gets assigned, engineer investigates) introduce 20-40 minute lags even when everything works perfectly. In cloud-native environments where issues can cascade in seconds, this model guarantees customer impact.

Mean Time to Recovery (MTTR) improvement has hit diminishing returns. Organizations have optimized their response processes extensively, yet they still measure success by how quickly they fix problems that should have been prevented entirely. A 10-minute MTTR still represents 10 minutes of SLA violation and potential revenue loss.

The cost of downtime extends beyond immediate revenue. SLA breaches trigger financial penalties, but the deeper costs (customer churn, competitive disadvantage, engineering opportunity cost) prove far more damaging. Every hour spent firefighting is an hour not spent innovating.

DBiz.ai's approach recognizes that incremental improvements to reactive processes can't solve a fundamental architecture problem. Better prediction enables better prevention.

What Predictive AIOps Actually Means

AIOps applies artificial intelligence and machine learning to IT operations, but the term has been diluted by vendors applying it to basic automation or alert consolidation. True predictive AIOps goes far deeper.

Predictive AIOps ingests massive volumes of operational telemetry. This includes logs, metrics, distributed traces, event streams, configuration changes, and deployment metadata. ML models then analyze this data to identify patterns that precede incidents. Rather than waiting for a threshold breach to trigger an alert, the system detects subtle anomalies and behavioral shifts that indicate degradation before it impacts users.

The technical foundation includes several critical capabilities:

Continuous data normalization and enrichment across heterogeneous sources. Your Kubernetes metrics, application logs, database performance data, and network telemetry exist in different formats with different timestamps and semantics. Predictive AIOps platforms normalize this data into a unified operational graph that reveals relationships traditional tools miss.

Multi-dimensional anomaly detection that understands normal is contextual. CPU at 80% might be normal for your payment processing service during business hours but anomalous at 3 AM. ML models learn these contextual patterns and flag deviations that static thresholds would ignore or generate false alarms about.

Cross-system correlation to identify root cause precursors. The database slowdown, the slight uptick in API latency, the marginal increase in error rates. Individually, each looks minor. Together, they indicate an impending cascade failure. Predictive models connect these dots across service boundaries, cloud providers, and infrastructure layers.

Time-series forecasting for performance degradation. Predictive models project when memory utilization will reach critical thresholds based on current trends, giving teams hours or days of advance warning to investigate and remediate. This approach provides far more value than simply alerting when utilization crosses 90%.

DBiz.ai's AI and data engineering services build these capabilities into operational workflows, ensuring predictions are actionable and aligned with business priorities.

How DBiz Shifts Operations from Reactive Patching to Proactive Prevention

Implementing aiops for incident management requires more than deploying a new monitoring tool. It demands a fundamental rethinking of how operational data flows, how incidents are prioritized, and how teams respond to predictive signals.

DBiz.ai's methodology centers on several key principles:

Telemetry normalization creates a single source of operational truth. We aggregate data across application instrumentation, infrastructure monitoring, cloud provider APIs, CI/CD pipelines, and business metrics into a unified data lake. This enables correlation analysis that siloed tools cannot perform.

ML-based event correlation eliminates noise before it reaches human operators. Intelligent correlation groups related signals, suppresses redundant alerts, and surfaces only the incidents that require human attention. This reduces alert volume by 70-80% while increasing signal quality, rather than forwarding every anomaly to the on-call engineer.

Early-warning predictive models run continuously against live telemetry. These models identify patterns associated with past incidents. Disk fill rates that historically precede database crashes, memory leak signatures, gradual performance degradation curves. The system alerts teams days or hours before customer impact occurs.

Business-impact prioritization maps technical events to SLA and revenue risk. Not all incidents are equal. A database replica failure during low-traffic hours is very different from a payment gateway latency spike during peak sales. Predictive AIOps platforms score incidents based on their potential business impact, ensuring teams focus on what matters most.

Safe automation enables self-healing for well-understood scenarios. When the system detects a known degradation pattern with high confidence, automated remediation playbooks can execute without human intervention. Restarting a service, scaling infrastructure, clearing a cache. All while logging the action for review.

Continuous feedback loops improve model accuracy. Every prediction, every incident, every remediation action feeds back into the ML models. The system learns which early-warning signals proved accurate, which were false positives, and refines its predictions accordingly.

DBiz.ai's intelligent IT operations practice delivers this operational transformation through both technology implementation and a complete shift in how teams interact with and manage complex systems.

The SLA Impact: Moving Beyond Downtime Recovery to Downtime Avoidance

The business case for predictive AIOps centers on a fundamental metric shift: reducing time to detection (TTD) to zero or even negative values.

Traditional monitoring operates on a reactive timeline. An issue occurs, monitoring detects it (TTD), teams diagnose it (time to identify), and then remediate it (time to resolve). Even with excellent processes, this sequence guarantees some period of customer impact.

Predictive AIOps compresses or eliminates TTD by identifying issues before they manifest as outages. The business outcomes are transformative.

Organizations implementing mature predictive AIOps capabilities report 40% fewer severity-1 incidents as teams remediate degradation before it escalates. A memory leak detected and addressed during normal business hours never becomes a 3 AM production outage.

SLA breach rates drop by 60% or more when teams can intervene proactively. Early warning on capacity constraints prevents performance degradation. Predictive change-risk assessment catches problematic deployments before they reach production.

Detection speed improves by 30% even for incidents that do occur because correlation models immediately surface the relevant signals rather than requiring engineers to manually sift through dashboards and log files.

Predictive capacity planning prevents a different class of incidents entirely. ML models forecast resource exhaustion weeks in advance, enabling teams to scale infrastructure during planned maintenance windows rather than emergency responses.

Change-risk assessment applies predictive models to deployment pipelines. Before code reaches production, AIOps platforms analyze the change against historical incident data, similar code modifications, and current system state to flag high-risk deployments for additional review.

These improvements directly protect revenue. For every percentage point reduction in SLA breaches, enterprises avoid contractual penalties, preserve customer relationships, and maintain competitive positioning. The ROI calculation is straightforward: predictive prevention costs far less than reactive remediation.

DBiz.ai's client outcomes demonstrate these metrics consistently across industries. Financial services organizations prevent transaction processing delays, logistics companies maintain real-time tracking accuracy, and e-commerce platforms protect revenue during peak shopping periods.

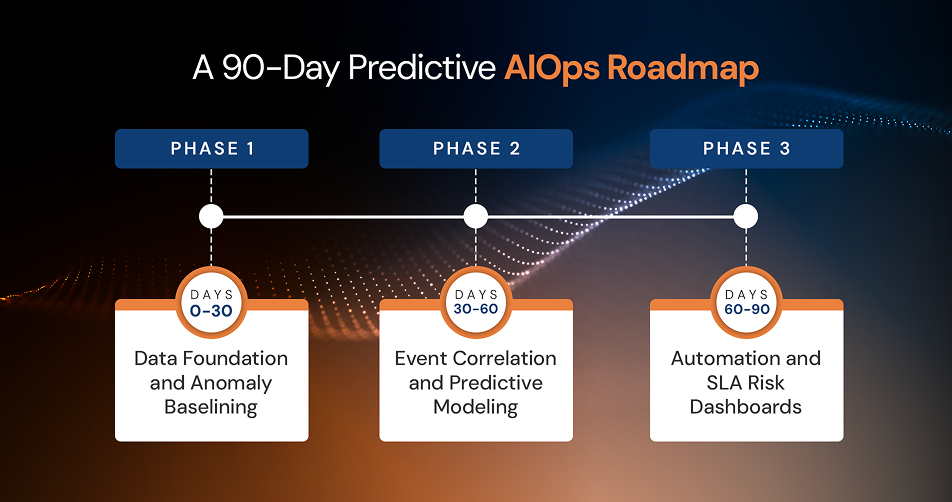

A 90-Day Predictive AIOps Roadmap

Implementing predictive AIOps requires focus rather than a multi-year transformation program. DBiz.ai's accelerated approach delivers measurable value within 90 days through an outcome-driven roadmap.

Phase 1 (Days 0-30): Data Foundation and Anomaly Baselining

The first month establishes the telemetry infrastructure. We identify the 5-10 most business-critical services and ensure comprehensive instrumentation across logs, metrics, traces, and business KPIs. Data flows into a centralized platform where normalization and enrichment occur.

Simultaneously, ML models begin learning normal operational patterns. What does healthy look like for each service across different times of day, days of week, and load conditions? This baseline becomes the foundation for anomaly detection.

Deliverable: Unified operational data lake with 30 days of baseline learning for priority services.

Phase 2 (Days 30-60): Event Correlation and Predictive Modeling

With baseline established, we activate correlation engines that group related anomalies and identify cross-service patterns. Historical incident data trains models to recognize the signatures of past problems.

Predictive models deploy for the most common incident types: resource exhaustion, performance degradation, dependency failures. The system begins generating early-warning alerts for human review, with feedback captured to refine accuracy.

Teams receive training on interpreting predictive signals and building response playbooks.

Deliverable: Active predictive models generating early-warning alerts with human-in-the-loop validation.

Phase 3 (Days 60-90): Automation and SLA Risk Dashboards

The final month activates safe automation for high-confidence scenarios. When the system detects a known pattern with >95% confidence, automated playbooks execute predefined remediation steps.

Business-aware dashboards visualize SLA risk in real time, showing not just current health but projected issues based on trend analysis. Leadership gains visibility into operational risk before it manifests as customer impact.

The feedback loop completes, with incident retrospectives feeding model improvements.

Deliverable: Production predictive AIOps platform with automation, SLA dashboards, and continuous improvement processes.

The Pilot Approach

Start with your top 5 business-critical services rather than attempting organization-wide deployment. This focused approach delivers rapid ROI, builds organizational confidence in predictive capabilities, and creates a template for expansion.

Contact DBiz.ai to design a pilot tailored to your specific operational challenges and SLA requirements.

Conclusion: The End of Reactive Operations

The "fire drill" approach to incident management (scrambling to respond after customers are already impacted) represents an outdated operational model that modern enterprises can no longer afford.

Predictive AIOps prevents incidents by detecting degradation before it cascades into outages. It protects SLAs by identifying risk before breaches occur. It frees engineering teams to focus on innovation rather than constant firefighting. The impact extends well beyond faster incident response.

The organizations that thrive in increasingly complex technical environments will be those that shift their focus. Measuring how effectively you prevent problems matters more than measuring how quickly you fix them. SLA protection becomes measurable, controllable, and engineered into operational workflows rather than hoped for through heroic manual efforts.

The technical foundation exists. ML models can reliably predict operational issues hours or days in advance. Automation frameworks can safely remediate known scenarios. Your organization needs to decide whether to adopt predictive operations before your competitors do.

Talk to DBiz.ai about building a predictive AIOps framework that protects your SLAs, reduces operational risk, and shifts your teams toward proactive prevention. The death of the fire drill starts with the first prediction.

Frequently Asked Questions

What is predictive AIOps?

Predictive AIOps applies artificial intelligence and machine learning to IT operations telemetry (logs, metrics, traces, events) to identify patterns that precede incidents. Predictive AIOps detects degradation signals hours or days in advance, enabling teams to remediate before customer impact, rather than alerting after problems occur.

How does AIOps reduce SLA breaches?

AIOps reduces SLA breaches by shifting the intervention point earlier in the failure timeline. Traditional monitoring detects issues after they manifest as outages. Predictive AIOps identifies warning signs (resource trends, performance degradation patterns, anomalous behavior) before they escalate into SLA violations, giving teams time to remediate during planned maintenance windows.

Can AIOps prevent incidents before downtime occurs?

Yes. Mature AIOps implementations prevent 40-60% of incidents that would otherwise occur by detecting and remediating degradation during its early stages. A memory leak caught trending toward critical levels can be addressed before it crashes the application. A capacity constraint identified weeks in advance can be resolved through planned scaling rather than emergency response.

What does a 90-day AIOps implementation look like?

A focused 90-day implementation follows three phases: Data foundation and baseline learning for critical services (Days 0-30), event correlation and predictive model activation with human validation (Days 30-60), and automation deployment plus SLA risk dashboards (Days 60-90). This delivers measurable value quickly while building toward comprehensive coverage.

How is predictive AIOps different from traditional monitoring?

Traditional monitoring uses static thresholds and reactive alerts. CPU exceeds 80%, disk space falls below 10%, error rate spikes above normal. These tools tell you a problem exists after it occurs. Predictive AIOps uses ML models to understand contextual baselines, detect subtle anomalies, correlate signals across systems, and forecast issues before they manifest as outages. Prevention versus reaction makes all the difference.